Research activities are indispensable for corporate marketing efforts. Among these, listening to and analyzing customer voices is particularly crucial. The key to marketing lies in how to gain "deep insights" from "many customers."

What if an "AI chatbot" could solve both these key challenges?

To advance such research methodologies, Dentsu Inc. and Dentsu Macromill Insight, Inc. have developed the beta version of "Smart Interviewer," a solution in which a proprietary AI chatbot automatically digs into consumer insights (release details here).

The Smart Interviewer pilot yielded noteworthy findings. Compared to standard questionnaire forms, it significantly increased the volume of free-response answers and demonstrated substantial improvements in the quality of responses.

Based on these pilot results, Ryuya Mizutsu from Dentsu Inc.'s Business Co-creation Bureau and Nao Okita from Dentsu Macromill Insight, Inc.'s Business Development Department—who led Smart Interviewer's development—will discuss the benefits and key points of using AI chatbots in research activities, along with future prospects.

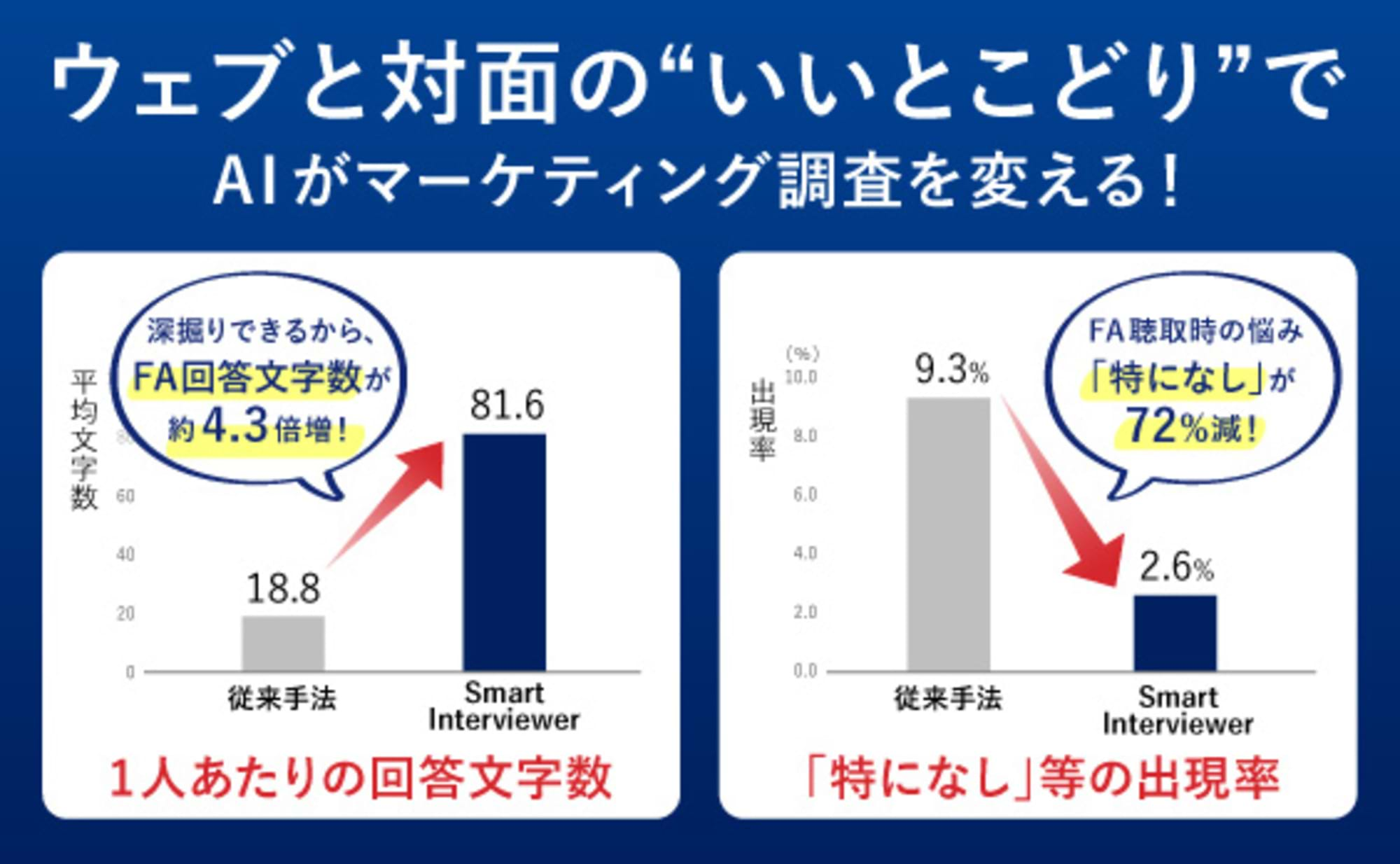

Free-response answer volume increased 4.3 times, while "None in particular" responses decreased by roughly 70%!

First, Mizutsu examines the results obtained in this experiment, comparing them with "answers obtained from the free-response form of a typical web survey" to identify their characteristics and trends.

In the proof-of-concept experiment, Smart Interviewer was used to gather responses from 500 participants regarding their impressions of a beverage brand's advertisement.

Overall trends showed a 4.3-fold increase in per-person response length and a 2.3-fold increase in the number of response perspectives compared to conventional methods. Meanwhile, responses like "Nothing in particular" decreased by 72%, indicating improvements in both the "quantity" and "quality" of responses.

Changes were also observed in the types of content respondents were more likely to comment on.

For example, when asked about their impression of the advertisement, the most frequently commented aspects were "advertising expressions" like the talent or catchphrase. While opinions on advertising expressions are certainly important, for marketers, the more crucial point is whether "those advertising expressions actually connect to product features or purchase intent."

However, with the traditional response form, responses often ended with opinions or impressions about the more noticeable advertising expressions. Consequently, the combined percentage of responses that included mentions of the truly desired "product features" or "attitude changes among respondents" remained around 35%.

With Smart Interviewer, the AI prompts respondents who answered about advertising expressions with follow-up questions about "product features" and "attitude changes." This increased the proportion of responses containing these elements to 52%.
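
As a quick check on the two figures above, the change can be read either as a gain in percentage points or as a relative increase. The snippet below is only an arithmetic illustration; it treats the "around 35%" figure as exactly 35%, which is an assumption, and the variable names are made up for this example.

```python
# Shares of responses mentioning "product features" or "attitude changes".
# The 0.35 baseline is approximate in the article ("around 35%").
baseline_share = 0.35   # traditional response form
smart_share = 0.52      # Smart Interviewer

# Absolute gain in percentage points.
point_gain = round((smart_share - baseline_share) * 100)

# Relative increase compared with the traditional form.
relative_increase = smart_share / baseline_share

print(f"{point_gain} percentage points, {relative_increase:.2f}x the baseline")
```

Under that assumption, the gain is 17 percentage points, or a little under 1.5 times the baseline share.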

So why does Smart Interviewer manage to draw out responses in such a balanced way?

An AI-powered interview technique utilizing the "laddering" method

First, watch a video showing the interaction between the respondent and the AI.

As shown, Smart Interviewer analyzes responses and automatically generates the "next question to ask." The underlying program is designed based on a research technique called the "laddering method."

The laddering method is a technique for unraveling human consciousness or brand value through a hierarchical structure.

For example, in advertising impression evaluation, by pre-training the AI on the "desired hierarchical structure" and "anticipated response elements" as shown below, it can automatically select questions aligned with the laddering structure.
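
To make the idea of selecting questions along a ladder more concrete, here is a minimal sketch in Python. It is not Smart Interviewer's actual implementation: the three rungs, the question wording, and the `next_question` function are all hypothetical, chosen only to mirror the "advertising expressions", "product features", and "attitude changes" elements mentioned in this article.

```python
from typing import Optional, Set

# Hypothetical ladder, ordered from surface impressions to deeper attitudes.
LADDER = ["ad_expression", "product_feature", "attitude_change"]

# Hypothetical follow-up questions, one per rung of the ladder.
FOLLOW_UPS = {
    "ad_expression": "What stood out to you in the advertisement?",
    "product_feature": "What did that tell you about the product itself?",
    "attitude_change": "How did that change the way you feel about the product?",
}


def next_question(covered: Set[str]) -> Optional[str]:
    """Return the question for the first rung not yet covered, or None when done."""
    for rung in LADDER:
        if rung not in covered:
            return FOLLOW_UPS[rung]
    return None


# A respondent who has only talked about the ad's expression
# is asked about product features next.
print(next_question({"ad_expression"}))
```

In this simplified form, the interview ends once every rung has been covered. A real system would presumably also need to handle answers that skip rungs or touch several at once.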

A key challenge during development was handling the "variability" in how responses are expressed and distinguishing between "synonyms" and "similar terms." For instance, even for a single response meaning "the talent was good," respondents might express it in various ways like "the performers were charming" or "the actors were wonderful."

To correctly map consumer responses to the appropriate "ladder (hierarchy)" and proceed to the next question, the AI must first accurately recognize these responses with their inherent "fluctuations."
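
The mapping problem can be illustrated with a deliberately naive sketch. This is an assumption-laden toy, not how the production system works: it uses a hand-written keyword table (`VARIANTS`) and substring matching, and every label in it is hypothetical. It only shows what "recognizing different wordings as the same element on the same rung" means.

```python
from typing import Optional, Tuple

# Hypothetical lookup: surface keyword -> (canonical element, ladder rung).
VARIANTS = {
    "talent": ("talent_appeal", "ad_expression"),
    "performer": ("talent_appeal", "ad_expression"),
    "actor": ("talent_appeal", "ad_expression"),
    "catchphrase": ("catchphrase", "ad_expression"),
    "taste": ("taste", "product_feature"),
    "want to buy": ("purchase_intent", "attitude_change"),
}


def classify(response: str) -> Optional[Tuple[str, str]]:
    """Map a free-text response to (element, rung) via keyword matching."""
    text = response.lower()
    for keyword, label in VARIANTS.items():
        if keyword in text:
            return label
    return None  # no known element recognized


# Three different wordings resolve to the same element and rung.
for answer in ["The talent was good",
               "The performers were charming",
               "The actors were wonderful"]:
    print(answer, "->", classify(answer))
```

A keyword table like this breaks down quickly with real responses, which is why a dedicated language-understanding engine is needed in practice.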

This challenge was overcome by leveraging Dentsu Inc.'s proprietary AI Japanese dialogue engine, "Kiku-Hana" (release details here). Kiku-Hana is built on the logic programming language "AZ-Prolog," which is well suited to natural language processing, understanding, and reasoning, and it excels at grasping the meaning of diverse linguistic expressions even with limited training data.

"Natural Dialogue" to Draw Out True Feelings in AI Chats

The next critical point was "How to establish natural conversation with respondents and draw out answers closer to their true feelings?"

We conducted two proof-of-concept experiments, asking respondents in each, "Was it easy to answer?" and "Did you enjoy answering?" In the first experiment, only about half of respondents answered "Yes" to both questions.

Therefore, for the second experiment, we made UI improvements such as adjusting the AI's conversational style and responses, and changing icons, while incorporating feedback from Dentsu Macromill Insight, Inc. moderators.

As a result, even though the dialogue content itself remained unchanged, the percentage of respondents who answered "It was easy to answer" and "I enjoyed answering" significantly improved. (See figure below)

We also received numerous positive comments from participants, such as "It helped me organize my thoughts," "I could answer more efficiently," and "Since it's AI, I felt comfortable answering honestly." This demonstrated how small adjustments to the conversation and UI can significantly change evaluations. Furthermore, it suggests that the format where the AI asks questions sequentially while providing appropriate responses helped enrich the open-ended answers.

We believe this dialogue and UI design can be developed further by combining it with creativity, which is a core strength of Dentsu Inc.

Key insights for marketers emerge from the response data!

From this section on, the author changes. Okita from Dentsu Macromill Insight, Inc. shares her impressions from actually using Smart Interviewer, along with feedback received from clients.

In typical web surveys, the method for collecting free answers often involves a single response box where participants write their thoughts in response to questions like, "Please freely share your impressions about [topic]."

To obtain richer free-response answers, one might consider providing 2-3 response boxes or presenting "example answers." However, the former often results in one-word responses, failing to reveal underlying reasons or context. The latter risks introducing bias through the examples provided.

Smart Interviewer resolves these challenges while also reaching a sample size that traditional human-conducted qualitative interviews cannot attain.

In this proof-of-concept experiment, we compared data collected using Smart Interviewer with data collected using a traditional single-box approach. We created maps using text mining tools to visualize the differences.

The data collected by Smart Interviewer shows an increase in the number of words appearing on the map and more branching paths.
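
The article does not say which text mining tool or map type was used. Assuming the map is a word co-occurrence network, which is a common choice for this kind of visualization, the underlying counts could be sketched as follows. The function name and sample words are hypothetical, and the responses are assumed to be already tokenized.

```python
from collections import Counter
from itertools import combinations
from typing import Iterable, List


def cooccurrence(responses: Iterable[List[str]]) -> Counter:
    """Count how often each pair of words appears in the same response."""
    pairs = Counter()
    for words in responses:
        # Deduplicate and sort so each pair has one canonical ordering.
        for a, b in combinations(sorted(set(words)), 2):
            pairs[(a, b)] += 1
    return pairs


# Hypothetical tokenized responses: longer answers yield more word pairs,
# which would appear as more nodes and branches on such a map.
short_answers = [["actor"], ["catchphrase"]]
long_answers = [["actor", "taste"], ["actor", "taste", "buy"]]

print(len(cooccurrence(short_answers)))  # one-word answers produce no pairs
print(cooccurrence(long_answers).most_common(1))
```

This is consistent with the observation above: responses that cover several elements in one context generate more connections between words than one-word answers do.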

For example, in ad evaluation, maps generated from data collected using traditional methods primarily focused on memorable ad expressions. In contrast, maps generated from data collected using Smart Interviewer show that data was captured within a single context, encompassing not only "memorable ad expressions" but also the "product image" conveyed and the resulting "psychological/attitudinal changes" (see figure below).

Furthermore, its use extends beyond proof-of-concept experiments to actual client applications. A representative from a beverage manufacturer who used Smart Interviewer for product concept evaluation provided the following feedback:

- Compared to the open-ended responses we typically get in concept evaluations, we obtained much richer descriptions.

- While most evaluations typically focus on rating the pros and cons of expressions in the presented text, the AI's deeper analysis revealed numerous words describing the perceived quality and benefits conveyed by the concept itself, and these were also confirmed in terms of the volume of responses.

- We found it to be an excellent solution that combines both quantitative and qualitative capabilities, enabling efficient hypothesis updating and verification when time is limited.

Thus, from both the research field and the client's perspective, Smart Interviewer delivers results that inspire satisfaction and expectation in terms of both the quantity and quality of responses.

Expanding application potential through AI data accumulation and creativity

In summary, Smart Interviewer's greatest strengths are:

- its ability to conduct interviews with a large number of people at once

- its AI's ability to delve deeper into each individual's responses

By further expanding these benefits, we ultimately aim to evolve it into a new research method that combines the best aspects of both questionnaire surveys and face-to-face interviews.

The main obstacle to achieving this at present is that training the AI takes time. We therefore plan to focus on accumulating a wide range of training data across diverse genres and on making the system more versatile.

The application of Smart Interviewer extends beyond the research domain. Its core technology, the "AI algorithm for deepening consumer insights (patent pending)," holds potential as a foundational technology to support various communication and marketing activities.

For example, we envision applications such as giving the conversational AI a character persona, linking it with animation for consumer communication, or integrating it with marketing automation tools and recommendation engines as part of digital transformation (DX).

Since the public release of this service, we've seen increasing interest from companies requesting trials, and we plan to expand its functionality further.

For those of you looking to take on new marketing approaches, why not join us in pioneering the AI era?

[Proof-of-Concept Experiment Overview]

Survey Overview: Impression evaluation of beverage brand video advertisements

Survey Method: Online survey (open-ended questions conducted via Smart Interviewer)

Sample Size: 500 respondents

Survey Period: December 2020

Survey Sponsor: Dentsu Inc.

Survey Implementation Agency: Dentsu Macromill Insight, Inc.